Very interesting stuff.

The first view.

This could take some time to comprehend in its entirety.

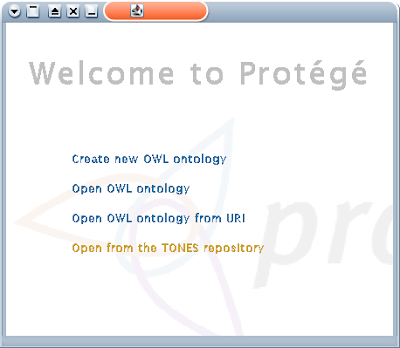

Stanford is developing a package called Protégé, with a web site at http://protege.stanford.edu/. As part of attempting to make matters worse in hopes of making them better, I am studying OWL and the ontology of languages. Though I had developed my own concept of what those ontologies were, it could help or confuse matters to absorb the perspective of others on the issue. It is always a headache to form a conceptual framework and then adjust to another perspective.

I am downloading Protégé now, and it appears to be an attempt to do exactly what I am doing as part of a larger concept of my MitOS and physical instantiation of a ballistic multi-dimensional parallel protein processor. OWL is used in bioinformatics and is applied in many areas of the web. I will report more on what I discover as my understanding progresses. It is likely to be several days before this is familiar. I will likely strip it apart, gather the conceptual basis, and include it as a concept method in my applications in a way that I feel is more intuitive and coherent with the central theme of my pursuits. I find these university projects to be clumsy and overreaching in their scale. It is better to have a flexible underframe like Boost, or a library structure, than to integrate too many things as a monument to a single concept, like Sage. Sage suffers from an attempt to implement too many divergent elements in one package. It would seem much better to have the capabilities in a library and combine them in various GUIs or in any application.

I will likely drift back and forth to this as I investigate language ontology. It has little utility until the underlying methods are applied in context. Only application will give me an understanding, so it is off the cliff into the data, manuals, and background.

    <rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
             xmlns:foaf="http://xmlns.com/foaf/0.1/"
             xmlns:dc="http://purl.org/dc/elements/1.1/">
      <rdf:Description rdf:about="http://en.wikipedia.org/wiki/Tony_Benn">
        <dc:title>Tony Benn</dc:title>
        <dc:publisher>Wikipedia</dc:publisher>
        <foaf:primaryTopic>
          <foaf:Person>
            <foaf:name>Tony Benn</foaf:name>
          </foaf:Person>
        </foaf:primaryTopic>
      </rdf:Description>
    </rdf:RDF>

This example from Wikipedia seems to represent the general consensus of what is taking place. Instead of implementing the interface to match the human user, the data and structure are redesigned to fit the computer. That is, IMHO, totally backward. The data exists in a format for human consumption, and the path is world -> data -> human. In this case they have decided to choose data -> obscure and bizarre format -> convoluted software -> interface -> structured viewing. I think it is freakish. I can read HTML and Perl and even this, but WTF, the data is already there in a human form, as it was created. By applying a layer of abstraction with rules of logic that are limited, they have managed to tie their hand to their leg and wave with the other. They have made us all gits. See below, from wiki.

git * (mildly derogatory) scumbag, idiot, annoying person (originally meaning illegitimate; from archaic form "get", bastard, which is still used to mean "git" in Northern dialects)

My goal now is to take the whacked up "triples" and associations and make a real model from them. Just because a computer stores stuff, it doesn't have to be stored in the most efficient way. The biggest problem with data is not the lack of it, it is the understanding of it and its relative importance. It seems the zip code of NY is stored with equal significance as the baseball flying toward my head that is about to make me unconscious. I am sorry, this is all wrong and I can't use this method of ontological abstraction to achieve effective results. I can make my program understand a sentence and produce a sentence, an image, or a voice. So why would I use an intermediary that is clumsy and complex? I want to know who threw the baseball and the ballistic statistics. The relationship of cotton producers in Venezuela to the surface construction, and their relationship to the mixed doubles tennis match in Berlin, is meaningless and wasteful. The data and its relationships are infinite and you can't relate it all. It is a fool's challenge and guaranteed work for the next 101000 years.
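For reference, here is a rough sketch of what the "triples" hiding in the Tony Benn example look like once they are pulled out. It uses only Python's standard `xml.etree.ElementTree`, and it is an illustration under assumptions, not a real RDF/XML parser: it only understands the nesting shape of that one sample, and the function names and the `_:b0` blank-node labels are hypothetical choices of mine.

```python
import xml.etree.ElementTree as ET

RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"

# Copy of the Wikipedia sample quoted earlier in the post.
RDF_XML = """<rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
         xmlns:foaf="http://xmlns.com/foaf/0.1/"
         xmlns:dc="http://purl.org/dc/elements/1.1/">
  <rdf:Description rdf:about="http://en.wikipedia.org/wiki/Tony_Benn">
    <dc:title>Tony Benn</dc:title>
    <dc:publisher>Wikipedia</dc:publisher>
    <foaf:primaryTopic>
      <foaf:Person>
        <foaf:name>Tony Benn</foaf:name>
      </foaf:Person>
    </foaf:primaryTopic>
  </rdf:Description>
</rdf:RDF>"""


def uri(tag):
    """Turn ElementTree's '{namespace}local' tag into a plain URI string."""
    return tag[1:].replace("}", "", 1)


def node_triples(node, triples, counter):
    """Collect triples for one node element and return its subject name."""
    subject = node.get(f"{{{RDF_NS}}}about")
    if subject is None:
        # No rdf:about attribute: invent a blank-node label (hypothetical scheme).
        subject = f"_:b{counter[0]}"
        counter[0] += 1
    if node.tag != f"{{{RDF_NS}}}Description":
        # A typed node such as foaf:Person implies an rdf:type triple.
        triples.append((subject, RDF_NS + "type", uri(node.tag)))
    for prop in node:
        children = list(prop)
        if children:
            # The property's value is a nested node; recurse into it.
            obj = node_triples(children[0], triples, counter)
        else:
            # The property's value is plain text.
            obj = (prop.text or "").strip()
        triples.append((subject, uri(prop.tag), obj))
    return subject


def extract_triples(xml_text):
    """Return (subject, predicate, object) tuples for the sample's shape only."""
    root = ET.fromstring(xml_text)
    triples, counter = [], [0]
    for node in root:
        node_triples(node, triples, counter)
    return triples


if __name__ == "__main__":
    for triple in extract_triples(RDF_XML):
        print(triple)
```

Every fact comes out as a flat (subject, predicate, object) tuple with nothing attached to say which one matters more, which is the equal-significance problem described above.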

My opinion is: They are trying to make the user look stupid, and this way the computer appears to be doing useful abstraction. This one is just plain wrong and I will not adopt this method. It would just wreck everything else I have achieved. I am sorry, whoever threw this baseball has a rag arm, and if I hadn't seen it coming, I probably would not have noticed it hit me. A content addressable memory would naturally order all this information, and this process is doomed to failure. A CAM is inherently content receptive and when they grasp that, this will fade into Vogon lore. I prefer to make my computer understand that when I type "find files that end in .blend with a whole name that contains dream and flower" it produces the shell script "locate" with the appropriate grep and regex. I don't want to speak Java or HTML. I like programming, but I don't want to be a program.
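To make that last request concrete, here is a toy sketch of the translation from sentence to shell pipeline. It is not my actual program and it understands nothing: it pattern-matches exactly one phrasing ("end in .ext ... contains a and b"). The function name `build_locate_command` is hypothetical, and the sketch assumes a `locate` that accepts `-r` for a basic regular expression, as the GNU findutils, mlocate, and plocate versions do.

```python
import re
import shlex


def build_locate_command(request):
    """Translate one narrow request phrasing into a locate/grep pipeline."""
    ext = re.search(r"end in (\.\w+)", request)
    words = re.search(r"contains (.+)$", request)
    if not ext or not words:
        raise ValueError("request does not match the one supported phrasing")

    # "dream and flower" -> ["dream", "flower"]
    terms = [w for w in re.split(r"\s+and\s+|\s*,\s*", words.group(1).strip()) if w]

    # Anchor the escaped extension at the end of the path, then filter by each word.
    parts = ["locate -r " + shlex.quote(re.escape(ext.group(1)) + "$")]
    parts += ["grep -i " + shlex.quote(term) for term in terms]
    return " | ".join(parts)


if __name__ == "__main__":
    print(build_locate_command(
        "find files that end in .blend with a whole name that contains dream and flower"
    ))
```

For the sentence above this prints `locate -r '\.blend$' | grep -i dream | grep -i flower`. The point is only that the sentence already carries everything the pipeline needs; no intermediate ontology is involved.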
